Supporting Math MTSS through SpringMath: FAQs

Getting to know SpringMath FAQs | SpringMath MTSS Guide

Evidence-base FAQs

Is SpringMath an evidence-based tool?

Yes. Two randomized controlled trials studying the efficacy of SpringMath were submitted to the National Center on Intensive Intervention (NCII) for rating on its Academic Intervention Tools Chart. NCII technical experts evaluate studies of academic intervention programs for quality of design and results, quality of other indicators, intensity, and additional research. One SpringMath study earned the highest possible rating, and the second earned one point below the highest possible rating. Additionally, SpringMath is listed as an evidence-based intervention by the University of Missouri Evidence-Based Intervention Network and as an evidence-based resource by the Arizona Department of Education.

There are different levels of evidence, and some sources that commercial vendors put forward as “evidence” would not be considered evidence by our SpringMath team. We prioritize peer-reviewed research published in top-tier academic journals, rigorous experimental designs, and a program of research that addresses novel questions and conducts systematic replication. SpringMath meets the most rigorous standards for evidence, including a transparent theory-of-change model in which each claim has been evaluated. The assessments and decision rules have met the most rigorous standards for reliability and decision accuracy. Intervention efficacy has been evaluated in three randomized controlled trials (RCTs), one of which was conducted by an independent research team not associated with the author or publisher of SpringMath. The first two RCTs were published in three articles in top-tier, peer-reviewed academic journals. The third RCT was completed in 2021 and has not yet been published, but SpringMath produced the strongest effect size on math achievement among the intervention tools included in that study. Additional published research has demonstrated an extremely low cost-to-benefit ratio (stated another way, a strong return on investment), reduced risk, systemic learning gains, and closure of opportunity (or equity) gaps with SpringMath. Drs. VanDerHeyden and Muyskens maintain an ongoing program of research aimed at continually evaluating and improving SpringMath’s effects in schools.

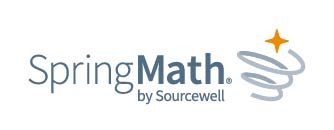

COVID provided a natural experiment for how districts struggling with math achievement can accelerate learning.

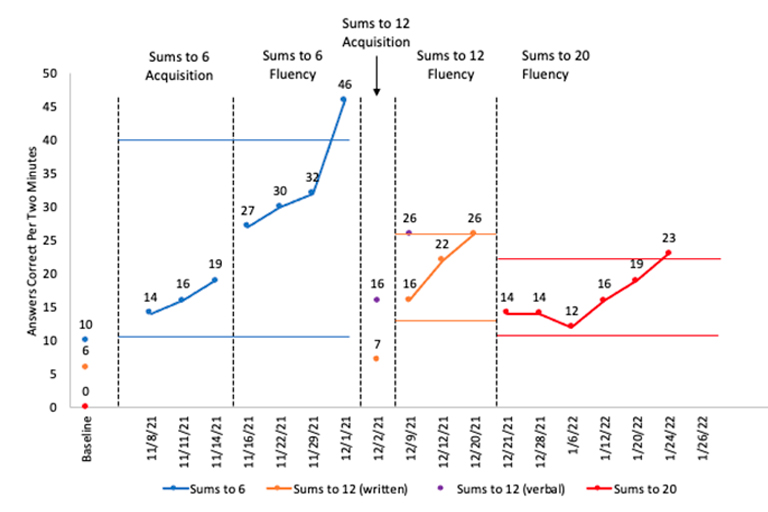

In districts that were using SpringMath before the COVID closures, we have seen rapid recovery of learning loss. For example, these data are from all second graders in a district in Michigan. Scores were lower at winter screening following the COVID school closures of the preceding spring. After classwide math intervention, however, the percentage of students proficient was comparable to the years preceding COVID, which is powerful evidence of learning-loss recovery.

Screening occurs three times per year. The first two screenings (fall and winter) are primarily the basis for identifying classes in need of classwide intervention and/or students in need of individual intervention. The spring screening is primarily used for program evaluation (along with the fall and winter screening data). Generally, students who require intervention are identified by the fall and winter screenings, and the only “new” cases detected at spring screening are students who did not participate in previous screenings and did not participate in classwide intervention. For classes routed into classwide intervention, the classwide intervention data are used to identify students for more intensive intervention.

The SpringMath screening measures are intentionally efficient. They are group administered and take about 15 minutes each season. If your class is recommended for classwide intervention, 15-20 minutes per day is sufficient to complete it, and the recommended dosage is a minimum of four days per week. Day 5 can fall on any day of the week. Individual interventions (which can be recommended from classwide intervention, or directly from screening when there is not a classwide learning problem) also require 15-20 minutes per day. When students are recommended for individual intervention, recommendations are made for small groups from week to week, so a single 20-minute investment can deliver intervention to several students at the same time. We recommend that teachers and data team members (e.g., principals, RTI coaches, math coaches) log in to the SpringMath coach dashboard each week to see which actions are underway and where assistance is needed to improve results. The coach dashboard makes specific recommendations about which teachers require assistance; summarizes learning gains at the grade, teacher, and student level; allows coaches to share data with parents; and provides an ideal tool for facilitating data team meetings in the school.

One of the lessons from MTSS research is that supplemental intervention sessions can be much shorter than 45 minutes, but they need to occur more often than twice per week. In fact, recent rigorous experimental studies of dosage are finding that briefer, more frequent intervention sessions (i.e., micro-dosing) produce stronger and more enduring learning gains (Duhon et al., 2020; Schutte et al., 2015; Solomon et al., 2020). Codding et al. (2016) examined this question directly for SpringMath intervention in a randomized controlled trial. Holding the weekly minutes allocated to intervention constant, the condition with the greatest number of (shorter) sessions per week produced about twice the effect of the twice-weekly condition, which in turn produced about twice the effect of the once-weekly condition; the once-weekly condition was no different from students who received no intervention (i.e., control).

Given recent experimental findings on dosage, it is becoming clear that daily intervention (or at least four days per week) is likely ideal for producing strong learning gains, not just in SpringMath but in all supplemental and intensive academic interventions. That said, schools implementing within a schedule that has already been set can find ways to approximate more effective dosing. For example, if students are available twice per week, teachers might run two consecutive sessions each time. Teachers might also add a third session during the week, either through creative scheduling or by working with the core classroom teacher to deliver classwide intervention to the entire class as part of the day’s fluency-building routine. We recommend that schools use their planning for year 2 implementation to build schedules consistent with optimal dosing in MTSS, which means shorter supplemental blocks (e.g., 20 minutes) four or five days per week.
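The dosage comparison can be made concrete with a small sketch. It assumes three hypothetical schedules that all allocate the same 80 minutes per week (an assumed figure matching four 20-minute sessions) and checks each against the four-sessions-per-week minimum stated above; the class and method names are illustrative, not part of SpringMath.

```python
from dataclasses import dataclass


@dataclass
class Schedule:
    """A hypothetical weekly intervention schedule (illustrative only)."""
    name: str
    sessions_per_week: int
    minutes_per_session: int

    @property
    def weekly_minutes(self) -> int:
        # Total minutes invested per week.
        return self.sessions_per_week * self.minutes_per_session

    def meets_minimum_frequency(self, min_sessions: int = 4) -> bool:
        # The FAQ recommends a minimum of four sessions per week.
        return self.sessions_per_week >= min_sessions


# Same weekly investment, different frequency (assumed example values).
schedules = [
    Schedule("once weekly", 1, 80),
    Schedule("twice weekly", 2, 40),
    Schedule("four days per week", 4, 20),
]

for s in schedules:
    print(f"{s.name}: {s.weekly_minutes} min/week, "
          f"meets 4-day minimum: {s.meets_minimum_frequency()}")
```

All three schedules cost the same 80 minutes; only the number of sessions changes, which is the variable the dosage research held apart from total minutes.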

This question is often a sign to our teams that the instructional expectations, sequencing, and pacing of math learning in a system may be out of sync with best practices. The SpringMath measures have been designed to reflect current learning expectations, and when systems report that such content has not yet been taught, this often signals that the pacing of instruction in the system may be off track. Let’s answer this question in three parts: screening, classwide intervention, and individual intervention. Part one is screening. Screening skills have been selected for maximal detection of risk. They are skills that should have been taught recently and that students must be able to perform accurately and independently to benefit from upcoming grade-level instruction. High-leverage skills are emphasized, and mastery is not expected. Screening skills are rigorous, and we expect that more learning will occur with them. The instructional target used at screening reflects successful skill acquisition. Schools have some flexibility in when to screen, which helps if you know you have not yet taught a skill that will be assessed but expect to teach it in the next week or two. It is fine to hold winter and spring screening a few weeks into each screening window.

Part two is classwide intervention. Each grade level has a set sequence of skills that classes must progress through. The sequence intentionally begins with below-grade-level foundation skills and increases incrementally in difficulty, so that mastery of skills early in the sequence prepares students for mastery of later skills. This sequencing is used because classwide math intervention is, by design, a fluency-building intervention. You might have noticed (or will notice) that when the median score in classwide intervention is below the instructional target (which we call the frustrational range), a whole-class acquisition lesson is provided so that the teacher can easily pause and reteach that skill before proceeding with classwide fluency building. There is flexibility here too: teachers can begin classwide intervention at any time during the year (following fall or winter screening) and can enter scores daily until the class median is below mastery. A class median below mastery is the sweet spot within which to begin classwide math intervention for maximal efficacy and efficiency.
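The classwide decision logic described in part two can be sketched as a function of the class median. This is a simplified, hypothetical illustration: the targets (20 and 40) are placeholder values, and the function name and return labels are ours, not SpringMath’s.

```python
from statistics import median
from typing import List


def classwide_decision(scores: List[float], instructional_target: float,
                       mastery_target: float) -> str:
    """Hypothetical sketch of the weekly classwide decision.

    scores: each student's score on the current skill this week.
    The targets are placeholders; real cut scores are specific to each measure.
    """
    class_median = median(scores)
    if class_median < instructional_target:
        # Frustrational range: pause for a whole-class acquisition lesson.
        return "acquisition lesson, then resume fluency building"
    if class_median >= mastery_target:
        # Median at mastery: the whole class moves up to the next skill.
        return "move class to next skill"
    # Instructional range: keep building fluency on the current skill.
    return "continue fluency building"


print(classwide_decision([8, 12, 15, 20, 22, 25, 31], 20, 40))
print(classwide_decision([2, 41, 45, 48, 52], 20, 40))
```

Because the decision uses the class median, one very low score (the 2 in the second call) does not keep the class from advancing; in the process the FAQ describes, such a student would instead become a candidate for individual intervention.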

Part three of this answer is about individual intervention. SpringMath screenings are the dropping-in points to intervention. Screening skills reflect rigorous, grade-level content. When a student is recommended for individual assessment and intervention, the assessment will begin from the first screening skill and sample back through incrementally prerequisite skills to find the skill gap or gaps that must be targeted to bring the student to mastery on the grade-level skill (which is the screening skill). There is a science to this process, and the skill that should be targeted is based on student assessment data. There is flexibility for teachers in that they might use the instructional calendars in Support as a model of pacing and a logical organizer to locate acquisition lessons, practice sheets, games, and word problems, which are available for all 145 skills in SpringMath. For convenience, we have also recommended booster lessons and activities in Support for teachers who wish to do more than just classwide math intervention. In other words, teachers can always do more, but it is sufficient to simply do what is recommended within the teacher and coach dashboards.
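The sample-back process in part three can be pictured as a walk down an ordered chain of prerequisite skills. This is a simplified sketch under stated assumptions, not SpringMath’s actual algorithm: it assumes a single linear chain, stops at the first mastered skill, and treats the most basic unmastered skill found as the Intervention skill. It also applies the acquisition-versus-fluency distinction the FAQ describes (below the instructional target suggests acquisition support; between the instructional and mastery targets suggests fluency building). Skill names, scores, and targets are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple


def find_intervention_skill(
    chain: List[str],
    assess: Callable[[str], float],
    instructional: Dict[str, float],
    mastery: Dict[str, float],
) -> Optional[Tuple[str, str]]:
    """Hypothetical sketch of sampling back from the Goal skill.

    chain: skills ordered from the Goal skill back toward the most basic
    prerequisite. Returns (intervention_skill, support_type), or None if
    the Goal skill is already at mastery.
    """
    target: Optional[Tuple[str, str]] = None
    for skill in chain:
        score = assess(skill)
        if score >= mastery[skill]:
            # This prerequisite is mastered, so stop sampling back.
            break
        # Below mastery: choose acquisition or fluency-building support.
        support = ("fluency building" if score >= instructional[skill]
                   else "acquisition")
        # Keep the most basic unmastered skill seen so far.
        target = (skill, support)
    return target


# Hypothetical skills, scores, and targets for one student.
chain = ["Goal skill", "Prerequisite A", "Prerequisite B"]
scores = {"Goal skill": 6, "Prerequisite A": 24, "Prerequisite B": 45}
instructional = {skill: 20 for skill in chain}
mastery = {skill: 40 for skill in chain}

print(find_intervention_skill(chain, lambda s: scores[s], instructional, mastery))
```

Here Prerequisite B is mastered, so sampling stops and Prerequisite A, where the student is accurate but not yet fluent, becomes the targeted skill with fluency-building support.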

SpringMath is a comprehensive multi-tiered system of support (MTSS). MTSS begins with universal screening to identify instructional need. These needs can range from the individual to the schoolwide level. If a curricular gap is identified, classwide intervention is the most efficient level at which to address it. Thus, all students need to be included in SpringMath. The screening data are used to summarize learning gains within and across classes and grades and to identify areas of need at the school level via automated program evaluation. These important actions of SpringMath and MTSS are not possible when not all students are included in the implementation. Many systems wonder whether they can use an existing screening tool to identify students for intervention and then use SpringMath only to deliver intervention. This is a costly mistake, because SpringMath continually harvests data to make more accurate decisions about who requires intervention, and what kind of intervention they require, based on the foundation of universal data collected three times per year.

Classwide intervention is a very powerful tactic when it is needed. It is an inefficient tactic when it is not needed. With SpringMath, you are not buying classwide math intervention. Rather, you are buying a comprehensive MTSS system that seamlessly links instructional intensifications to assessment data using automated data interpretation and summary reports and guides your MTSS implementation step by step. All students must be included in the assessments at routine time points to verify core program effectiveness, to detect students who are lagging behind expectations in a highly sensitive way, and to guide effective repair of learning gaps. When classwide intervention is not recommended, that is because it is needed neither as a second screening gate nor as a mechanism to reduce overall risk (because risk is low). Using the automated summary reports and data interpretation, SpringMath will then very accurately recommend students for small-group or individual intervention. The summary reports, including the coach and administrator dashboards, harvest data from all students to allow progress monitoring of math learning at the system level. This is not possible when SpringMath is not used universally. Nor is it possible to use other screening measures to determine who should receive intervention in SpringMath, because other measures are not as sensitive as those used in SpringMath. Further, SpringMath screening measures are the “dropping-in” points for the diagnostic assessment that provides the right interventions to the students who need them. Summary reports show the growth of all students across screening measures over the course of the school year, and the gains of students receiving tier 2 or 3 interventions relative to the performance of classroom peers on the same measures. Again, this is not possible when not all students are included in the assessments.
SpringMath assessment and expert layered decision rules recommend — and then provide — precisely what is needed, ensuring the correct stewardship of limited resources and the best return on investment for the effort.

If your class has already started classwide intervention based on the results of a prior screening, you may continue classwide intervention if you choose, even if your class scores above the criterion for recommending classwide intervention on subsequent screenings.

Teachers often express this concern. The short answer is “No, it’s not a waste of time for high-performing students to participate in classwide math intervention.” Classwide intervention requires only about 15-20 minutes per day, and children in all performance ranges tend to grow well. If many children score in the frustrational range, an acquisition lesson will be recommended (and provided) right in the classwide intervention teacher dashboard. Students in the instructional and mastery range show strong upward growth given well-implemented classwide intervention. When the median reaches mastery, the entire class is moved up to the next skill, which ensures that students who are already in the mastery range do not remain on that skill for very long. Additionally, for higher-performing students, classwide intervention is a useful cumulative review tactic that has been associated with long-term skill retention and robust, flexible skill use. Thus, it has been demonstrated that even higher-performing students grow well with classwide math intervention, both in SpringMath and in other peer-mediated learning systems.

The decision rule for moving the class to the next skill is written such that most of your remaining students should be above the instructional target (meaning they have acquired the skill but have not yet reached the level of proficiency that forecasts generalized, flexible use of that skill). Because skills often overlap within and across grade levels in increasing increments of difficulty, moving forward students who are in the instructional range is a low-stakes event: they are likely to receive continued skill-development support and to show growth, and even future mastery, on subsequent skills. In contrast, children who remain in the frustrational range when the class has moved on to a new skill are highly likely to be recommended for individual intervention. That decision occurs in the background and has a very high degree of accuracy, such that children who require individual intervention are not missed (we call this “sensitivity”). Thus, when SpringMath recommends that you move on to a new skill, you should feel confident moving your class forward. SpringMath continues to track individual student progress and will recommend intervention for any child who truly requires additional support to succeed in grade-level content. That said, when considering student progress, pay particular attention to the dashboard metric called “Scores Increasing.” Aim to raise this value above 80% each week; it reflects week-to-week growth across all your students, including whether your lowest-performing students are beating their own scores from the preceding week. If you have an additional 15-20 minutes to supplement students and/or a teacher or coach to help you differentiate during core instruction, you can provide additional instruction via acquisition lessons, games, and practice materials.
These materials are available in the support portal and can be used with students who were most recently not at mastery when the class moved on to a subsequent skill. Again, working with students who did not attain mastery in this way is not technically necessary since SpringMath will recommend individual intervention and deliver the right protocol for you when it’s truly necessary for individual students.
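The exact formula behind the Scores Increasing metric is not given here, so the sketch below assumes one plausible definition: the percentage of students, among those scored in both weeks, whose current score beats their previous week’s score. The function name and the student data are hypothetical.

```python
from typing import Dict


def scores_increasing(previous: Dict[str, float], current: Dict[str, float]) -> float:
    """Assumed definition: percent of students scored both weeks who improved."""
    both = [name for name in current if name in previous]
    if not both:
        return 0.0
    improved = sum(1 for name in both if current[name] > previous[name])
    return 100.0 * improved / len(both)


last_week = {"Ava": 10, "Ben": 14, "Cal": 20, "Dee": 7, "Eli": 30}
this_week = {"Ava": 13, "Ben": 15, "Cal": 19, "Dee": 11, "Eli": 34}

pct = scores_increasing(last_week, this_week)
print(f"Scores Increasing: {pct:.0f}% (goal: above 80%)")
```

In this example four of five students improved, giving exactly 80%, which does not yet exceed the goal; the one student whose score dipped is the one to watch next week.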

All students at times, and especially students who experience mathematics difficulties, may have difficulties with attention, motivation, self-regulation, and working memory (Compton, Fuchs, Fuchs, Lambert, & Hamlett, 2012). Unfortunately, interventions focused on executive function have not been shown to improve achievement. Yet supporting executive-function difficulties within academic interventions can make those interventions more successful. For example, Burns et al. (2019) found that intervention based on student assessment produced strong effects on multiplication learning gains that were independent of working memory, whereas simple flashcard practice did not. Thus, intensive interventions should ideally provide math instruction in ways that support self-regulation and reinforcement strategies, minimize cognitive load on working memory, minimize excessive language load by incorporating visual representations, and provide fluency practice (Fuchs, Fuchs, & Malone, 2018; Powell & Fuchs, 2015).

In SpringMath, diagnostic assessment is used to verify mastery of prerequisite understandings and determine the need for acquisition support versus fluency-building support so that a student is placed into the correctly aligned intervention skill (task difficulty) and receives the aligned instructional support according to their needs. Concrete-representational-abstract sequencing is used within and across intervention protocols, and students are provided with multiple response formats (e.g., oral, written, completing statements and equations to make them true, finding and correcting errors). Tasks are broken down into manageable chunks and new understandings are introduced using skills students have already mastered. Fluency of prerequisite skills is verified and, if needed, developed to ease learning of more complex content. Weekly progress monitoring and data-driven intervention adjustments ensure that the intervention content and tactic remain well aligned with the student’s needs. Intervention scripts provide goals to students and emphasize growth and beating the last best score. Self-monitoring charts are used for classwide and individual intervention, and rewarding gains is recommended. Sessions are brief but occur daily for optimal growth (Codding et al., 2016). These features make the learning experience productive, engaging, and rewarding to the student. The coach dashboard tracks intervention use and effects throughout the school. When needed, SpringMath alerts the coach to visit the classroom for in-class coaching support to facilitate correct intervention to promote student learning gains and student motivation for continued growth. Several accommodations are permitted, including adjusting response formats, reading problems to students, and repeating assessments.

(My students have processing disorders so they will never reach mastery if I don’t extend their time.)

We understand the pressure teachers feel to move through content quickly, but moving students on to the next skill before they have attained mastery on the prerequisite skill will lessen their chances of mastering future content. In one district, in classes in which students moved on without having reached the mastery criterion, the probability of mastering any additional content plummeted to 35%. In classes that required students to reach mastery before moving on, probability of future skill mastery was 100%. Further, classes that took the time to make sure students attained mastery actually covered more content because they were able to reach mastery much more quickly on later skills.

Thus, we strongly recommend that you make the investment to bring as many students to mastery as possible during classwide intervention. It is possible. Students will get there. And the investment will pay off for you with better learning and more content coverage in the long run.

It is not true that students with processing disorders cannot attain instructional and mastery criteria. One or two low-performing students will not prevent your class from moving forward, and these one or two students would certainly be recommended for individual intervention, which arguably is the correct level of support for such students. Individual intervention is customized to a specific student’s learning needs via diagnostic assessment, and often, such students require acquisition and fluency building for prerequisite skills to enable success in grade-level content. In fact, we recommend that students who receive individual intervention be allowed to continue in classwide intervention if possible because at some point their skill gap will close sufficiently that they will derive greater benefit from classwide intervention.

We recommend focusing on improving your Scores Increasing metric in your dashboard, which is an indicator of overall growth during classwide intervention. We have many resources in Support to help teachers attain upward growth in classwide intervention.

Does SpringMath work well with special populations?

(e.g., students receiving special education, students who are struggling in math, advanced students)

Fortunately, two randomized controlled trials were conducted that included students at risk for math difficulties and disabilities. Analyses examined effects for students in intervention groups who met criteria for being at risk for math difficulties (e.g., scoring 1 SD or 2 SDs below the mean on last year’s state test) and for students receiving special education services. In general, populations with greater initial risk experience a much stronger risk reduction when randomly assigned to SpringMath intervention (because they had more risk to start). Cost-benefit analyses indicate that as risk increases, the return on investment in implementing SpringMath intervention actually improves (i.e., lower incremental cost-effectiveness ratios, or ICERs). The National Center on Intensive Intervention (NCII) requires reporting of intervention effects for students scoring below the 20th percentile on an external measure (i.e., a population considered to be at risk or in need of supplemental instruction). On the standard curriculum-based measures, effect sizes ranged from 0.56 to 1.11 for students scoring below the 20th percentile on the preceding year’s state test and from 0.19 to 0.73 for students receiving special education services under any eligibility category. On the year-end test for grade 4 students, the effect size was 0.79 for students scoring below the 20th percentile on the preceding year’s test and 0.35 for students receiving special education services.
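For readers unfamiliar with the term, the incremental cost-effectiveness ratio (ICER) is conventionally defined in cost-effectiveness analysis as the extra cost of an intervention divided by the extra effect it produces relative to a comparison condition. The symbols below are generic and are not taken from the SpringMath analyses: $C_1$ and $E_1$ are the cost and effect of the intervention, and $C_0$ and $E_0$ are those of the comparison condition.

```latex
\mathrm{ICER} = \frac{C_1 - C_0}{E_1 - E_0}
```

A lower ICER means less additional cost per unit of effect gained, which is why lower ICERs indicate a better return on investment.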

For advanced students, we recommend using the support portal to access more challenging lessons, practice materials, games, and word problems to enrich students’ experiences during core math instruction or during the supplemental enrichment period.

How can educators use SpringMath data to write IEP goals and objectives?

Ideally, students receiving special education services would have routine access to the most intensive instruction available in a school setting. This ideal is rarely the reality. Most schools must decide how to deploy limited resources to meet the needs of all learners. Still, teams planning instructional calendars and daily schedules should consider the ideal of making the most intensive instruction available to the students with the greatest demonstrated needs. Systems can use SpringMath to deliver intensive intervention to students on IEPs daily in a highly efficient manner. Students on IEPs can participate in core instruction in the general education setting if that is their usual plan and can participate in classwide math intervention with their core instructional grouping. If they need more intensive instruction, SpringMath will identify them and recommend them for diagnostic assessment and intervention. If SpringMath does not recommend them but you want to provide individual intervention anyway, anyone with coach-level access can schedule any student in the school for individual intervention by clicking the dots next to the student’s name in the Students tab.

Diagnostic assessment begins with the grade-level screening skills (we call these the Goal skills) and samples back through incrementally prerequisite skills, assessing each skill systematically, to identify which skills should be targeted for intervention. We call the targeted skill the Intervention skill. Weekly assessment is conducted on both the Intervention skill and the Goal skill. For IEP goals, we suggest instructional-range performance on the current Goal skill and, if the IEP spans school years, on future Goal skills. Logical IEP objectives include mastery of the Intervention skills. Drs. VanDerHeyden and Muyskens are always happy to advise systems on using SpringMath for IEP planning and implementation.

What accommodations are allowed?

Several accommodations are permitted, including adjusting response formats (students can respond orally, in writing, or in some cases by selecting responses). Problems can be read aloud to students. Instructions can be provided to students in their native languages. Teachers can work one on one to deliver assessment and/or intervention. Assessments can be repeated under optimal conditions (using rewards, a quiet space, warm-up activities). Timings can be adjusted and scores prorated to obtain equivalent answers-correct-per-unit-of-time scores. For example, a child can work for one minute and the score can be doubled to estimate what the performance would have been over two minutes. If a child seems anxious about being stopped after two minutes, the teacher can mark the stopping point at two minutes and allow the child to keep working, reporting the score as the number of correct answers completed by the two-minute mark.
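The proration arithmetic and the two-minute stopping mark can be expressed as two small helpers. This is a sketch assuming a two-minute (120-second) reference timing, as in the example above, and assuming the teacher records when each correct answer was completed; the function names are hypothetical.

```python
from typing import Iterable


def prorate(correct: int, seconds_worked: float, reference_seconds: float = 120.0) -> float:
    """Scale an answers-correct count to the reference timing."""
    if seconds_worked <= 0:
        raise ValueError("seconds_worked must be positive")
    return correct * reference_seconds / seconds_worked


def correct_by_mark(correct_times: Iterable[float], mark_seconds: float = 120.0) -> int:
    """Count correct answers completed at or before the stopping mark."""
    return sum(1 for t in correct_times if t <= mark_seconds)


print(prorate(14, 60))  # 28.0: a one-minute score doubled to a two-minute estimate
print(correct_by_mark([10, 35, 70, 118, 125, 150]))  # 4: answers after 120 s are ignored
```

Proration assumes the child’s rate stays roughly constant across the timing, which is why it suits modest adjustments rather than very short timings.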

Assessment-related FAQs

SpringMath uses a novel approach to math screening that enables more sensitive detection of learning needs and more sensitive monitoring of learning gains. The measures work in tandem with the instructional protocols so that students are matched with the right interventions when they are needed. In other words, the SpringMath measures are the “dropping-in” points to intervention and must be administered even if you are using another screening in your school. Technical adequacy data reported and evaluated on the Academic Screening Tools Chart at the National Center on Intensive Intervention indicate that SpringMath screening measures are technically strong and suitable for universal screening in math. Additionally, SpringMath is the only tool on the market that uses classwide intervention as a second screening gate, which is the recommended approach to screening in MTSS when many students in a system are experiencing academic risk. If your school already uses another math screener, we suggest treating it as an external measure of the effectiveness of SpringMath in your system. These data can be uploaded into the program evaluation feature, and we will report dosage and effects on SpringMath measures and on the external measures of your choice.

First, SpringMath is an in-tandem assessment and intervention system that improves math achievement for all students. To accomplish that goal, the assessments used in SpringMath are necessary to drive the instructional recommendations and actions. As detailed in the previous question, other measures cannot be substituted to make the instructional decisions within SpringMath. Each SpringMath measure has its own cut scores, and the measures are used in multiple ways to drive implementation. For example, they feed the metrics in coach dashboards, which reflect intervention dosage and drive implementation support. In effect, every assessment data point aggregates up from the single student to power many important MTSS decisions.

Second, SpringMath measures are currently unique in the marketplace. No other system of mastery measurement is currently available. Mastery measurement is a necessary feature in math MTSS because general outcome measures do not have sufficient sensitivity to power the MTSS decisions (screening, progress monitoring) (VanDerHeyden, Burns, Peltier, & Codding, 2022). So given this rationale, it would certainly be possible for schools to undertake building their own measures. After all, that is where Dr. VanDerHeyden began this work in 2002: building measures that were necessary but unavailable at that time. What followed was 20 years of research and development that created the 145 reliable, valid, sensitive, and technically equivalent generated measures with tested cut scores and decision rules of SpringMath.

Building measures is technically challenging and requires measurement expertise. It also requires a tremendous investment of time: building the SpringMath measure generator took several years, and before the measures were used with children, our research team generated and tested over 45,000 problems. SpringMath measures were built on 20 years of research led by Dr. VanDerHeyden, and that research continues today through our own programs of research and those led by independent researchers in school psychology and education. Importantly, the program of research to date demonstrates not just the technical adequacy of the measures but also their value within MTSS for improving math achievement for all students and closing opportunity gaps. This type of evidence is called “consequential validity,” and it is especially lacking for many assessment tools. When there is no research showing that the scores are used to improve outcomes, an assessment may simply invite problem admiration and waste resources (e.g., instructional minutes), because using it brought no benefit to students.

It is an important ethical mandate of school psychology and educational assessment in general that children be subjected to assessments that meet certain technical expectations (AERA/APA/NCME, 2014) such that the scores can be considered reliable, valid, equitable, and useful to the student. Thus, it is a very questionable practice to use measures that have not been subjected to technical validation. Few systems have the bandwidth to develop and then sufficiently evaluate measures for use within their systems. And if they did, the effort required to do so is not free. It would involve a significant investment of resources that likely would be greater than simply adopting a research-based set of measures. Teams may decide that SpringMath is not a good fit for their district, of course, but teams should follow the ethical tenet of educational assessment in adopting measures that meet conventional technical adequacy standards. Fortunately, implementers can identify measures that suit their needs by consulting NCII Tools Charts (www.intensiveintervention.org).

Unlike other tools, SpringMath emphasizes targets based on functional benchmark criteria. Many tools use simple norm-referenced cut scores to determine risk. For example, students below the 20th percentile are considered at risk and in need of supplemental intervention, and students below the 40th percentile might be considered at “some risk.” Such criteria are highly error prone. First, it is quite possible to be at the 50th percentile and still be at risk and, conversely, to be at the 20th percentile and not be at risk. Simple norm-referenced criteria ignore local base rates, which reflect the amount of risk in a given classroom (a more direct reflection of the quality of instruction and the rigor of expectations in that setting). Second, many schools do not have normal distributions of performance, and norm-referenced criteria will be highly error prone in such contexts. Third, the percentile criteria used by commercial vendors are based on the convenience samples available to them from their current user base. Fourth, simple percentile criteria are arbitrary. Functionally, what makes a child at risk at the 20th percentile but not at risk at the 21st percentile? Does a one-percentile change represent one less meaningful unit of risk? How can such risk be explained to parents? In contrast, benchmark criteria are meaningful and easy to explain to parents. SpringMath uses criteria that indicate meaningful outcomes such as an absence of errors (or stated another way, a high degree of accuracy), likelihood of skill retention, and flexible skill use (i.e., faster learning of more complex, related content and application or generalized performance). We recommend (and SpringMath uses) a dichotomous decision, at risk or not at risk, because a third category of “some risk” is difficult for systems to act on.
Specifically, at screening, we require that at least 50% of students in a class score at or above the “instructional target.” The instructional target is the threshold for the instructional range, indicating that a skill has been acquired and that students are independently accurate. During intervention, we require that students reach the mastery target. Mastery is the level of performance most strongly associated with skill retention, application/generalization, and flexibility. Mastery-level proficiency is also most strongly associated with scoring in the proficient range on more comprehensive and distal measures like year-end tests.
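To make the screening rule concrete, here is a minimal sketch of the class-level check and the dichotomous student-level decision. It is an illustration, not SpringMath's code: the function names, the scores, and the instructional target of 20 answers correct are hypothetical, and treating a student as at risk when scoring below the instructional target is our assumption.

```python
def class_meets_screening_rule(scores, instructional_target):
    """True when at least 50% of the class scores at or above the
    instructional target (the class-level screening rule described above)."""
    if not scores:
        raise ValueError("scores must not be empty")
    at_or_above = sum(1 for s in scores if s >= instructional_target)
    return at_or_above / len(scores) >= 0.5


def is_at_risk(score, instructional_target):
    """Dichotomous decision: True (at risk) or False (not at risk).
    Assumption: a student is at risk when scoring below the instructional target."""
    return score < instructional_target


# Hypothetical class of six students screened on a skill with a target of 20.
scores = [12, 25, 31, 18, 22, 9]
print(class_meets_screening_rule(scores, 20))          # True (3 of 6 at or above)
print([is_at_risk(s, 20) for s in scores])
```

Note that there is no “some risk” branch: every score maps to exactly one of two actionable outcomes.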

The SpringMath targets are designed to maximize efficiency and accuracy of decision making at each decision point in MTSS. For example, requiring the median to reach mastery before the class advances to the next skill during classwide intervention increases the number of students who are at least instructional. Thus, students who remain in the frustrational range, when the class median has reached mastery, can readily be identified and recommended for individual assessment and intervention. SpringMath targets are based on decades of academic screening research and are routinely evaluated to ensure continued accuracy and efficiency.
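The classwide decision described above can be sketched as follows: the class advances when its median reaches mastery, and any student still in the frustrational range (below the instructional target) at that point is flagged for individual follow-up. This is an illustrative sketch, not SpringMath's implementation; the function name `classwide_decision`, the student labels, and the targets (20 and 40 answers correct) are hypothetical.

```python
from statistics import median


def classwide_decision(scores, instructional_target, mastery_target):
    """Return (advance, flagged) for one classwide-intervention skill.

    advance: True when the class median has reached the mastery target.
    flagged: students still below the instructional target (frustrational
             range) once the median is at mastery; empty otherwise.
    """
    class_median = median(scores.values())
    advance = class_median >= mastery_target
    flagged = []
    if advance:
        flagged = sorted(name for name, s in scores.items() if s < instructional_target)
    return advance, flagged


# Hypothetical weekly scores (answers correct) for five students.
scores = {"A": 42, "B": 38, "C": 15, "D": 45, "E": 40}
print(classwide_decision(scores, instructional_target=20, mastery_target=40))
# (True, ['C'])
```

Because most of the class is at mastery, student C's low score is unlikely to reflect weak core instruction, which is what makes the flag informative.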

In math, some skills are more complex than others, and complexity is largely reflected in the number of digits a student must write to solve a problem correctly. Digits are usually counted for each operational step and for each place-value position. One simple way to think about digits correct is as a form of partial credit. But digits correct can be very complicated to score, especially for more challenging skills with multiple solution paths (e.g., simplification of fractions). Thus, SpringMath has identified the answers-correct equivalent scores that reflect instructional and mastery performance for all 145 skills K-12. Easier skills (which also appear at lower grade levels) require more answers correct because each answer may require only a one- or two-digit response, whereas more challenging skills (which appear at the higher grades) may require only a few answers correct because each answer may be worth 25-30 digits correct.
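As a rough illustration of the partial-credit idea, the sketch below scores only the digits of a final whole-number answer, comparing them position by position from the ones place. This is a simplification we are assuming for illustration: as noted above, full digits-correct scoring also counts digits in intermediate steps (e.g., partial products), which this hypothetical `answer_digits_correct` function ignores.

```python
def answer_digits_correct(student_answer: str, correct_answer: str) -> int:
    """Count digits in the student's answer that match the correct answer
    in the same place-value position, aligned from the ones place.

    Simplification: only the final answer (a string of digits) is scored;
    digits written in intermediate steps are not counted here.
    """
    student_rev = student_answer[::-1]  # ones place first
    correct_rev = correct_answer[::-1]
    return sum(1 for s, c in zip(student_rev, correct_rev) if s == c)


# 47 + 38 = 85: writing 75 earns credit for the ones digit only.
print(answer_digits_correct("75", "85"))      # 1
# 23 x 45 = 1035: writing 1025 misses only the tens digit.
print(answer_digits_correct("1025", "1035"))  # 3
```

Even this reduced version shows why a single hard problem can carry many more digits than an easy one, and why converting to answers-correct equivalents simplifies scoring.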

We calculate Rate of Improvement (ROI) using ordinary least squares regression, which is the conventional way to compute ROI in curriculum-based measurement. In effect, using every data point in a series, it finds the linear trend (line) that minimizes the sum of the squared vertical distances between the line and the data points, that is, the “line of best fit.” Typically, ROI is reported as the change in the number of answers correct per unit of time (one, two, or four minutes) per week. If you were graphing this, you would plot each progress monitoring score (on the y-axis) against the date it was collected (on the x-axis). Put simply, ROI is how much the score went up each week. So, on a two-minute measure, if the score was 30 at week one, 35 at week two, and 40 at week three, then the ROI would be five answers correct per two minutes per week.
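The ordinary least squares slope can be computed directly from its definition. The sketch below reproduces the worked example above (30, 35, 40 over three weeks gives five answers correct per week) and adds a hypothetical noisier series to show that the line of best fit uses every point rather than just the first and last scores. The function name `rate_of_improvement` is ours.

```python
def rate_of_improvement(weeks, scores):
    """Ordinary least squares slope of scores on weeks: the change in
    answers correct (per measure duration) per week."""
    n = len(weeks)
    if n != len(scores) or n < 2:
        raise ValueError("need at least two paired (week, score) points")
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    if sxx == 0:
        raise ValueError("weeks must not all be identical")
    return sxy / sxx


# Worked example from the text (two-minute measure).
print(rate_of_improvement([1, 2, 3], [30, 35, 40]))          # 5.0
# Hypothetical noisier series: OLS gives 3.5, while first-to-last gives 4.0.
print(rate_of_improvement([1, 2, 3, 4], [30, 36, 35, 42]))   # 3.5
```

In Python 3.10 and later, `statistics.linear_regression(weeks, scores).slope` returns the same value.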

The ROI calculation used in SpringMath is consistent with recommended practices in RTI. Yet, ROI can never be interpreted in a vacuum. ROI depends on many things, including the efficacy of core instruction, the intensity and integrity of intervention, the scope of the intervention target, the sensitivity of the outcome measures, and the agility (and frequency) with which the intervention is adjusted to maintain alignment and intensity of the intervention. Determining what ROI is considered adequate raises other problems. Aimlines draw a line from starting performance to desired ending performance over some selected period of time, and ROI that is similar to the aimline is considered sufficient. Aimlines are easy to understand and highly practical; however, they are greatly influenced by the starting score (baseline) and the amount of time allocated to the intervention trial, which is often arbitrary.
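A short calculation shows how strongly an aimline depends on the baseline and on the length of the intervention trial. In this sketch the goal of 40 answers correct, the observed ROI of 3 per week, and the rule that ROI is “sufficient” when it meets or exceeds the aimline slope are all hypothetical choices for illustration.

```python
def aimline_slope(baseline, goal, weeks):
    """Weekly growth needed to move from the baseline score to the goal in `weeks` weeks."""
    if weeks <= 0:
        raise ValueError("weeks must be positive")
    return (goal - baseline) / weeks


def roi_meets_aimline(roi, baseline, goal, weeks):
    """Hypothetical sufficiency rule: observed ROI is at least the aimline slope."""
    return roi >= aimline_slope(baseline, goal, weeks)


roi = 3.0  # the same observed growth, judged under three different aimlines
print(roi_meets_aimline(roi, baseline=20, goal=40, weeks=8))  # True  (needs 2.5/week)
print(roi_meets_aimline(roi, baseline=10, goal=40, weeks=8))  # False (needs 3.75/week)
print(roi_meets_aimline(roi, baseline=20, goal=40, weeks=4))  # False (needs 5.0/week)
```

Identical progress can be judged adequate or inadequate simply by changing the starting score or the (often arbitrary) trial length.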

SpringMath helps by automating decision making at each stage of the RTI process to limit potential “confounds,” or errors that can threaten the validity of the ultimate RTI decision. SpringMath also emphasizes comparisons between student ROIs and class ROIs during classwide intervention, where students receive the same intervention layered on the same core instructional program with the same degree of integrity. During individual intervention, SpringMath reports ROI for goal skills (rather than target skills) because goal skills have the greatest number of available data points from which to estimate ROI. Teams can use the ROI metric to characterize student progress across phases of the intervention. For a more detailed discussion and case study in the use of an RTI identification approach, see https://www.pattan.net/multi-tiered-system-of-support/response-to-intervention-rti/rti-sld-determination.
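One way to picture the student-versus-class comparison during classwide intervention is sketched below. The source does not specify how class ROI is computed, so we assume, purely for illustration, that it is the least squares slope of the weekly class median; each student's ROI is then reported as a difference from it. The function names and scores are hypothetical, and no flagging threshold is implied.

```python
from statistics import median


def ols_slope(xs, ys):
    """Ordinary least squares slope of ys on xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx


def compare_to_class(weeks, student_scores):
    """Return (class_roi, {student: student_roi - class_roi}).

    Assumption: class ROI is the OLS slope of the weekly class median.
    student_scores maps each student to scores aligned with `weeks`.
    """
    weekly_medians = [median(week) for week in zip(*student_scores.values())]
    class_roi = ols_slope(weeks, weekly_medians)
    gaps = {name: ols_slope(weeks, s) - class_roi for name, s in student_scores.items()}
    return class_roi, gaps


weeks = [1, 2, 3]
student_scores = {"A": [10, 14, 18], "B": [12, 15, 18], "C": [8, 8, 9]}
class_roi, gaps = compare_to_class(weeks, student_scores)
print(class_roi)  # 4.0
print(gaps)       # A about 0, B about -1, C about -3.5
```

Because every student received the same intervention on the same core program, a large negative gap such as student C's is easier to interpret than an ROI viewed in isolation.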

Teachers and parents worry about math anxiety, and some math education experts caution against tactics used in math class, such as timed tasks and tests, which might theoretically cause or worsen anxiety (Boaler, 2012). Such assertions are theoretical but seem to resonate strongly with some teachers and parents and get repeated as “fact” in the media. Yet evidence does not support the idea that people are simply anxious or not anxious by nature in the context of math assessment and instruction (Hart & Ganley, 2019). Rather, math anxiety is bi-directionally connected to skill proficiency. In other words, students with weaker skills report more anxiety, and students who report more anxiety have weaker skills. Gunderson, Park, Maloney, Beilock, and Levine (2018) found that weak skills reliably preceded anxiety and that anxiety further contributed to weak skill development. They found that anxiety could be attenuated by two strategies: improving skill proficiency (which cannot be done by avoiding challenging math work and timed assessment) and promoting a growth mindset (as opposed to a fixed-ability mindset) using specific language and instructional arrangements. Namkung, Peng, and Lin (2019) conducted a meta-analysis and found a negative correlation between anxiety and math performance (r = -.34). This relationship was stronger when the math task involved multiple steps and when students believed that their scores would affect their grades. Interestingly, Hart and Ganley (2019) found the same pattern with adults. Self-reported adult math anxiety was negatively correlated with fluent addition, subtraction, multiplication, and division performance (r = -.25 to -.27) and with probability knowledge (r = -.31 to -.34). Self-reported test-taking anxiety was negatively correlated with math skill fluency and probability knowledge, too (r = -.22 to -.26). Given these emerging data, one must wonder whether math anxiety has been oversimplified in the press.
In any case, avoiding challenging math tasks is not a wise response when teachers worry about math anxiety because that will only magnify skill deficits, which in turn will worsen anxiety. Finally, some teachers believe that timed activities are especially anxiety-causing for students. Existing research that included school-age participants does not support the idea that timed assessment causes anxiety, no matter what thought leaders have speculated (Grays, Rhymer, & Swartzmiller, 2017; Tsui & Mazzocco, 2006).

Fortunately, there is much that teachers can do to help students engage with challenging math content and limit possible anxiety in the classroom. Research data suggest that math instruction offering brief timed practice opportunities in low-stakes ways, using pre-teaching and warm-up activities, and focusing on every child “working their math muscles to get stronger and show growth” is a powerful mechanism for reducing anxiety while also building student skill. Verifying mastery of prerequisite skills improves student engagement, motivation, and self-efficacy. SpringMath interventions include all of these specific actions to mitigate anxiety and build mathematical success, which protects students in a multitude of ways, including by preventing future math anxiety.

One final comment here. It is critical that adults set the tone for practice with challenging math content (for which students have mastered the prerequisites) in a way that presumes learning is possible without anxiety. In popular media, it seems that some teachers have been made afraid to provide timed practice activities. One of the authors of this text recently read a social media post from a math teacher that cited “teacher’s autobiographical histories” as evidence for presuming that any timed activity inevitably produces math anxiety. This kind of presumption is inappropriate because it can lead teachers to avoid tactics that are highly effective in building math mastery for all students. Teachers’ recollections of their own learning in elementary school are interesting and certainly personally meaningful. But they are not a source of evidence that should guide how instruction is provided. Evidence seeks to identify findings that are generally meaningful and to rule out alternative explanations for those findings (i.e., confounds). A teacher’s own experience (or even the collective experience of most teachers) is not generally applicable, even if presumed perfectly accurate and reliable when reported many decades later and without regard to the context in which it was lived, because it pertains only to the students who went on to become teachers. In other words, it is possible that other students in the same environment, given the same exposure, did not experience anxiety, and perhaps those students became engineers, physicians, and so on. We know that teaching is a human enterprise and that teachers must provide an environment in which students feel supported to learn. We encourage teachers to follow the evidence in supporting learners to meet challenging content and to grow incrementally toward mastery.
We believe this means using brief timed practice opportunities in low-stakes ways after ensuring that students have mastered the prerequisite skills. That’s exactly how SpringMath works.

Schools collect lots of student data during a school year but often fail to consider and respond to what those data say about the effects of instruction in the school. MTSS is a powerful mechanism to evaluate local programs of instruction, allowing leaders and decision teams to ask questions about specific program effects, effects of supplemental supports, reduction of risk among specific groupings of students, and progress toward aspirational targets like students thriving in more advanced course sequences over time (Morrison & Harms, 2018). SpringMath is designed to aggregate data in useful ways to facilitate systemic problem solving. From the coach dashboard, teams can view progress at the school, grade, class, and student level in easy-to-read summary reports and graphs. Assisted data interpretation is always provided so implementers do not have to guess about what the data are telling them. Because universal screening is part of SpringMath, systems can view improved proficiency across screening occasions on grade-aligned measures. With effective implementation, schools would want to see the percentage of students not at risk improve across subsequent screenings (this can be viewed from the Screening tab and from the Growth tab, which is automatically updated as you implement). Schools would want to see classes mastering skills every three to four weeks during classwide intervention, classes meeting the mid-year goal marked in the Classwide Intervention tab, and all students growing each week during classwide intervention (the Scores Increasing metric in the coach dashboard should be greater than 80%). The Growth tab should show that classes and grades are gaining in the percentage of students not at risk across subsequent screenings (e.g., fall to winter and winter to spring) and that the final classwide intervention session brings most students into the not-at-risk range.
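The Growth tab computes these summaries automatically. The sketch below only illustrates the underlying arithmetic for one grade: the percentage of students not at risk at each screening occasion, and whether that percentage rose from one occasion to the next. The function names, scores, and targets are hypothetical. Each occasion carries its own target because a different grade-aligned measure may be used at each screening, and we assume that “not at risk” means scoring at or above that occasion's instructional target.

```python
def percent_not_at_risk(scores, instructional_target):
    """Percentage of students at or above the instructional target.
    Assumption: scoring at or above the target means 'not at risk'."""
    return 100 * sum(s >= instructional_target for s in scores) / len(scores)


def screening_trend(occasions):
    """occasions: (scores, instructional_target) pairs in time order,
    e.g., fall, winter, spring. Returns the percentage not at risk per
    occasion and whether it increased at every subsequent screening."""
    pcts = [percent_not_at_risk(scores, target) for scores, target in occasions]
    improving = all(later > earlier for earlier, later in zip(pcts, pcts[1:]))
    return pcts, improving


fall = ([12, 25, 18, 30], 20)
winter = ([22, 28, 26, 35], 25)
spring = ([30, 33, 29, 40], 28)
print(screening_trend([fall, winter, spring]))  # ([50.0, 75.0, 100.0], True)
```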
Finally, systems can select Program Evaluation under their log-in ID to access a full program evaluation report that presents effects by school, grade, and class, along with dosage, and recommends specific ways that schools might improve their effects.

This feature was released in the 2022-23 school year. It can be accessed from the drop-down menu attached to your log-in ID.

Security FAQs

The SpringMath software application is published by Sourcewell. Sourcewell has implemented separate policies (generally the “Data and Security Policies” or “Data and Security Policy”) that include details about the manner in which Sourcewell protects the confidentiality and safety of educational data. Below is a list of current Data and Security Policies. Sourcewell reserves the right to update each Data and Security Policy from time to time in the ordinary course of business. The most recent version of each policy is available at the link on Sourcewell’s website indicated below or in hard copy upon request.

(a) Data Privacy Policy (“Privacy Policy”), available at https://sourcewell.org/privacy-policy

(b) Minnesota Government Data Practices Act Policy (“MGDPA Policy”), available at https://sourcewell.org/privacy-policy

(c) Terms of Use – Sourcewell website, available at https://sourcewell.org/privacy-policy

(d) Information Security Policy (“Security Policy”), available upon request

Teachers have access to current year student data for those classes that they have been assigned to by the rosters submitted by the district or to classes as assigned by the district data administrator through the application. Coaches have access to schoolwide data for the current year and for prior years. The coach role is assigned by the authorized district representative. Data administrators submit the rosters for their districts and have access to all schools within the district. Data administrators are assigned by the authorized district representative.

Sourcewell acknowledges and agrees that customer data is owned solely by the customer. Sourcewell will not share or disclose customer data to any third party without prior written consent of the Customer. Sourcewell will ensure that any and all customer data shall be used expressly and solely for the purposes enumerated in Customer Agreements.

Sourcewell will restrict access of all employees and consultants to customer data strictly on a need-to-know basis in order to perform their job responsibilities. Sourcewell will ensure that any such employees and consultants comply with all applicable provisions of this Privacy Policy with respect to customer data to which they have appropriate access. Further details about data privacy can be viewed by accessing the Sourcewell Data Privacy Policy.

On August 1 of each year, SpringMath rolls forward to a new academic year. Currently, schools or data administrators need to submit a new roster or rosters of students for the upcoming school year.

Teachers can view only students who are enrolled in classrooms to which they are assigned, but coaches can view SpringMath data for prior years. Prior year data are maintained and can be accessed from your account from the drop-down menu on your log-in ID.

From the teacher dashboard (easily accessed from the coach’s dashboard), you can click into your Students tab. From there you can select any student and a summary of all data collected for that student relative to instructional and mastery targets and compared to the class median on the same skill is displayed in a series of graphs to help parents see exactly how their child is progressing during math instruction and intervention. Summary graphs can also easily be downloaded as a PDF, attached to an email and then shared with parents.

Additional Resources

References

Boaler, J. (2012). Commentary: Timed tests and the development of math anxiety: Research links “torturous” timed testing to underachievement in math. Education Week. Retrieved from https://www.edweek.org/ew/articles/2012/07/03/36boaler.h31.html

Burns, M. K., Aguilar, L. N., Young, H., Preast, J. L., Taylor, C. N., & Walsh, A. D. (2019). Comparing the effects of incremental rehearsal and traditional drill on retention of mathematics facts and predicting the effects with memory. School Psychology, 34(5), 521–530. https://doi.org/10.1037/spq0000312

Codding, R. S., VanDerHeyden, A. M., Martin, R. J., & Perrault, L. (2016). Manipulating treatment dose: Evaluating the frequency of a small group intervention targeting whole number operations. Learning Disabilities Research & Practice, 31, 208–220.

Compton, D. L., Fuchs, L. S., Fuchs, D., Lambert, W., & Hamlett, C. (2012). The cognitive and academic profiles of reading and mathematics learning disabilities. Journal of Learning Disabilities, 45(1), 79–95. https://doi.org/10.1177/0022219410393012

Duhon, G. J., Poncy, B. C., Krawiec, C. F., Davis, R. E., Ellis-Hervey, N., & Skinner, C. H. (2020). Toward a more comprehensive evaluation of interventions: A dose-response curve analysis of an explicit timing intervention. School Psychology Review. https://doi.org/10.1080/2372966X.2020.1789435

Fuchs, L. S., Fuchs, D., & Malone, A. S. (2018). The taxonomy of intervention intensity. Teaching Exceptional Children, 50(4), 194–202. https://doi.org/10.1177/0040059918758166

Grays, S., Rhymer, K., & Swartzmiller, M. (2017). Moderating effects of mathematics anxiety on the effectiveness of explicit timing. Journal of Behavioral Education, 26(2), 188–200. https://doi.org/10.1007/s10864-016-9251-6

Gunderson, E. A., Park, D., Maloney, E. A., Beilock, S. L., & Levine, S. C. (2018). Reciprocal relations among motivational frameworks, math anxiety, and math achievement in early elementary school. Journal of Cognition and Development, 19, 21–46. https://doi.org/10.1080/15248372.2017.1421538

Hart, S. A., & Ganley, C. M. (2019). The nature of math anxiety in adults: Prevalence and correlates. Journal of Numerical Cognition, 5, 122–139.

Morrison, J. Q., & Harms, A. L. (2018). Advancing evidence-based practice through program evaluation: A practical guide for school-based professionals. Oxford University Press.

Namkung, J. M., Peng, P., & Lin, X. (2019). The relation between mathematics anxiety and mathematics performance among school-aged students: A meta-analysis. Review of Educational Research, 89(3), 459–496. https://doi.org/10.3102/0034654319843494

AERA/APA/NCME (2014). Standards for educational and psychological testing. Washington, DC: AERA.

Powell, S. R., & Fuchs, L. S. (2015). Intensive intervention in mathematics. Learning Disabilities Research & Practice, 30(4), 182–192. https://doi.org/10.1111/ldrp.12087

Schutte, G., Duhon, G., Solomon, B., Poncy, B., Moore, K., & Story, B. (2015). A comparative analysis of massed vs. distributed practice on basic math fact fluency growth rates. Journal of School Psychology, 53, 149–159. https://doi.org/10.1016/j.jsp.2014.12.003

Solomon, B. G., Poncy, B. C., Battista, C., & Campaña, K. V. (2020). A review of common rates of improvement when implementing whole-number math interventions. School Psychology, 35, 353–362.

Tsui, J. M., & Mazzocco, M. M. M. (2006). Effects of math anxiety and perfectionism on timed versus untimed math testing in mathematically gifted sixth graders. Roeper Review, 29(2), 132–139. https://doi.org/10.1080/02783190709554397

VanDerHeyden, A. M., Burns, M. K., Peltier, C., & Codding, R. S. (2022). The science of math: The importance of mastery measures and the quest for a general outcome measure. Communiqué, 51(1).

VanDerHeyden, A. M., Witt, J. C., & Gilbertson, D. A. (2007). A multi-year evaluation of the effects of a response to intervention (RTI) model on identification of children for special education. Journal of School Psychology, 45, 225–256. https://doi.org/10.1016/j.jsp.2006.11.004